Can Python Cancel the Read Command With Threading

Python threading allows you to have different parts of your program run concurrently and can simplify your design. If you've got some experience in Python and want to speed up your program using threads, then this tutorial is for you!

In this article, you'll learn:

- What threads are

- How to create threads and wait for them to finish

- How to use a ThreadPoolExecutor

- How to avoid race conditions

- How to use the common tools that Python threading provides

This article assumes you've got the Python basics down pat and that you're using at least version 3.6 to run the examples. If you need a refresher, you can start with the Python Learning Paths and get up to speed.

If you're not sure if you want to use Python threading, asyncio, or multiprocessing, then you can check out Speed Up Your Python Program With Concurrency.

All of the sources used in this tutorial are available to you in the Real Python GitHub repo.

What Is a Thread?

A thread is a separate flow of execution. This means that your program will have two things happening at once. But for most Python 3 implementations the different threads do not actually execute at the same time: they merely appear to.

It's tempting to think of threading as having two (or more) different processors running on your program, each one doing an independent task at the same time. That's almost right. The threads may be running on different processors, but they will only be running one at a time.

Getting multiple tasks running simultaneously requires a non-standard implementation of Python, writing some of your code in a different language, or using multiprocessing, which comes with some extra overhead.

Because of the way the CPython implementation of Python works, threading may not speed up all tasks. This is due to interactions with the GIL that essentially limit one Python thread to run at a time.

Tasks that spend much of their time waiting for external events are generally good candidates for threading. Problems that require heavy CPU computation and spend little time waiting for external events might not run faster at all.

This is true for code written in Python and running on the standard CPython implementation. If your threads are written in C they have the ability to release the GIL and run concurrently. If you are running on a different Python implementation, check with the documentation to see how it handles threads.

If you are running a standard Python implementation, writing in only Python, and have a CPU-bound problem, you should check out the multiprocessing module instead.
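To make the point about waiting-heavy tasks concrete, here is a minimal sketch that times three tasks that only wait, first one after another and then in separate threads. The helper names (wait_for_event, run_sequentially, run_threaded) are made up for this illustration, and time.sleep() stands in for real network or disk waits. Creating threads and calling .join() are explained later in this tutorial.

```python
import threading
import time


def wait_for_event(delay):
    """Stand-in for an I/O-bound task: it only waits and does no CPU work."""
    time.sleep(delay)


def run_sequentially(delays):
    """Run the tasks one after another and return the elapsed seconds."""
    start = time.perf_counter()
    for delay in delays:
        wait_for_event(delay)
    return time.perf_counter() - start


def run_threaded(delays):
    """Run each task in its own thread and return the elapsed seconds."""
    start = time.perf_counter()
    threads = [
        threading.Thread(target=wait_for_event, args=(delay,))
        for delay in delays
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return time.perf_counter() - start


delays = [0.2, 0.2, 0.2]
print(f"sequential: {run_sequentially(delays):.2f}s")
print(f"threaded:   {run_threaded(delays):.2f}s")
```

The waits overlap in the threaded version, so it should take roughly as long as the longest single wait instead of the sum of all of them. A CPU-bound function in place of wait_for_event() would not be expected to show the same gain on CPython.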

Architecting your program to use threading can also provide gains in design clarity. Most of the examples you'll learn about in this tutorial are not necessarily going to run faster because they use threads. Using threading in them helps to make the design cleaner and easier to reason about.

So, let's stop talking about threading and start using it!

Starting a Thread

Now that you've got an idea of what a thread is, let's learn how to make one. The Python standard library provides threading, which contains most of the primitives you'll see in this article. Thread, in this module, nicely encapsulates threads, providing a clean interface to work with them.

To start a separate thread, you create a Thread instance and then tell it to .start():

    import logging
    import threading
    import time

    def thread_function(name):
        logging.info("Thread %s: starting", name)
        time.sleep(2)
        logging.info("Thread %s: finishing", name)

    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO,
                            datefmt="%H:%M:%S")

        logging.info("Main    : before creating thread")
        x = threading.Thread(target=thread_function, args=(1,))
        logging.info("Main    : before running thread")
        x.start()
        logging.info("Main    : wait for the thread to finish")
        # x.join()
        logging.info("Main    : all done")

If you look around the logging statements, you can see that the main section is creating and starting the thread:

    x = threading.Thread(target=thread_function, args=(1,))
    x.start()

When you create a Thread, you pass it a function and a sequence containing the arguments to that function. In this case, you're telling the Thread to run thread_function() and to pass it 1 as an argument.

For this article, you'll use sequential integers as names for your threads. There is threading.get_ident(), which returns a unique identifier for each running thread, but these are usually neither short nor easily readable.
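As a small sketch of the naming options mentioned above, the following records both the integer from threading.get_ident() and a readable name set through the Thread constructor's name argument. The record_identity() helper, the seen dictionary, and the worker-N names are invented for this illustration.

```python
import threading

seen = {}  # label -> (thread name, thread identifier)


def record_identity(label):
    """Store the identifiers Python assigns to the thread running this call."""
    current = threading.current_thread()
    seen[label] = (current.name, threading.get_ident())


workers = [
    threading.Thread(target=record_identity, args=(index,), name=f"worker-{index}")
    for index in range(2)
]
for worker in workers:
    worker.start()
for worker in workers:
    worker.join()

for label, (name, ident) in sorted(seen.items()):
    print(label, name, ident)
```

The identifiers printed will differ from run to run, and Python may reuse an identifier once a thread has exited, which is one more reason the examples here stick to small integers.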

thread_function() itself doesn't do much. It simply logs some messages with a time.sleep() in between them.

When you run this program as it is (with line 20 commented out), the output will look like this:

    $ ./single_thread.py
    Main    : before creating thread
    Main    : before running thread
    Thread 1: starting
    Main    : wait for the thread to finish
    Main    : all done
    Thread 1: finishing

You'll notice that the Thread finished after the Main section of your code did. You'll come back to why that is and talk about the mysterious line 20 in the next section.

Daemon Threads

In computer science, a daemon is a process that runs in the background.

Python threading has a more specific meaning for daemon. A daemon thread will shut down immediately when the program exits. One way to think about these definitions is to consider the daemon thread a thread that runs in the background without worrying about shutting it down.

If a program is running Threads that are not daemons, then the program will wait for those threads to complete before it terminates. Threads that are daemons, however, are just killed wherever they are when the program is exiting.

Let's look a little more closely at the output of your program above. The last two lines are the interesting bit. When you run the program, you'll notice that there is a pause (of about 2 seconds) after __main__ has printed its all done message and before the thread is finished.

This pause is Python waiting for the non-daemonic thread to complete. When your Python program ends, part of the shutdown process is to clean up the threading routine.

If you look at the source for Python threading, you'll see that threading._shutdown() walks through all of the running threads and calls .join() on every one that does not have the daemon flag set.

So your program waits to exit because the thread itself is waiting in a sleep. As soon as it has completed and printed the message, .join() will return and the program can exit.

Frequently, this behavior is what you want, but there are other options available to us. Let's first repeat the program with a daemon thread. You do that by changing how you construct the Thread, adding the daemon=True flag:

    x = threading.Thread(target=thread_function, args=(1,), daemon=True)

When you run the program now, you should see this output:

    $ ./daemon_thread.py
    Main    : before creating thread
    Main    : before running thread
    Thread 1: starting
    Main    : wait for the thread to finish
    Main    : all done

The difference here is that the final line of the output is missing. thread_function() did not get a chance to complete. It was a daemon thread, so when __main__ reached the end of its code and the program wanted to finish, the daemon was killed.

join() a Thread

Daemon threads are handy, but what about when you want to wait for a thread to stop? What about when you want to do that and not exit your program? Now let's go back to your original program and look at that commented out line 20, which reads # x.join().

To tell one thread to wait for another thread to finish, you call .join(). If you uncomment that line, the main thread will pause and wait for the thread x to complete running.

Did you test this on the code with the daemon thread or the regular thread? It turns out that it doesn't matter. If you .join() a thread, that statement will wait until either kind of thread is finished.
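If waiting indefinitely is not what you want, .join() also accepts an optional timeout in seconds. The sketch below is only an illustration with a made-up slow_task() function. Because .join() always returns None, it checks .is_alive() afterward to find out whether the thread actually finished.

```python
import threading
import time


def slow_task():
    """Hypothetical worker that takes about half a second."""
    time.sleep(0.5)


worker = threading.Thread(target=slow_task)
worker.start()

# Wait for at most about 0.1 seconds, then carry on regardless.
worker.join(timeout=0.1)
still_running = worker.is_alive()

# Without a timeout, join() blocks until the thread has finished.
worker.join()
finished = not worker.is_alive()

print(still_running, finished)  # True True
```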

Working With Many Threads

The example code so far has only been working with two threads: the main thread and one you started with the threading.Thread object.

Frequently, you'll want to start a number of threads and have them do interesting work. Let's start by looking at the harder way of doing that, and then you'll move on to an easier method.

The harder way of starting multiple threads is the one you already know:

    import logging
    import threading
    import time

    def thread_function(name):
        logging.info("Thread %s: starting", name)
        time.sleep(2)
        logging.info("Thread %s: finishing", name)

    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO,
                            datefmt="%H:%M:%S")

        threads = list()
        for index in range(3):
            logging.info("Main    : create and start thread %d.", index)
            x = threading.Thread(target=thread_function, args=(index,))
            threads.append(x)
            x.start()

        for index, thread in enumerate(threads):
            logging.info("Main    : before joining thread %d.", index)
            thread.join()
            logging.info("Main    : thread %d done", index)

This code uses the same mechanism you saw above to start a thread: create a Thread object, and then call .start(). The program keeps a list of Thread objects so that it can then wait for them later using .join().

Running this code multiple times will likely produce some interesting results. Here's an example output from my machine:

    $ ./multiple_threads.py
    Main    : create and start thread 0.
    Thread 0: starting
    Main    : create and start thread 1.
    Thread 1: starting
    Main    : create and start thread 2.
    Thread 2: starting
    Main    : before joining thread 0.
    Thread 2: finishing
    Thread 1: finishing
    Thread 0: finishing
    Main    : thread 0 done
    Main    : before joining thread 1.
    Main    : thread 1 done
    Main    : before joining thread 2.
    Main    : thread 2 done

If you walk through the output carefully, you'll see all three threads getting started in the order you might expect, but in this case they finish in the opposite order! Multiple runs will produce different orderings. Look for the Thread x: finishing message to tell you when each thread is done.

The order in which threads are run is determined by the operating system and can be quite hard to predict. It may (and likely will) vary from run to run, so you need to be aware of that when you design algorithms that use threading.

Fortunately, Python gives you several primitives that you'll look at later to help coordinate threads and get them running together. Before that, let's look at how to make managing a group of threads a bit easier.

Using a ThreadPoolExecutor

There's an easier way to start up a group of threads than the one you saw above. It's called a ThreadPoolExecutor, and it's part of the standard library in concurrent.futures (as of Python 3.2).

The easiest way to create it is as a context manager, using the with statement to manage the creation and destruction of the pool.

Here's the __main__ from the last example rewritten to use a ThreadPoolExecutor:

    import concurrent.futures

    # [rest of code]

    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO,
                            datefmt="%H:%M:%S")

        with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
            executor.map(thread_function, range(3))

The code creates a ThreadPoolExecutor as a context manager, telling it how many worker threads it wants in the pool. It then uses .map() to step through an iterable of things, in your case range(3), passing each one to a thread in the pool.

The end of the with block causes the ThreadPoolExecutor to do a .join() on each of the threads in the pool. It is strongly recommended that you use ThreadPoolExecutor as a context manager when you can so that you never forget to .join() the threads.

Running your example code will produce output that looks like this:

    $ ./executor.py
    Thread 0: starting
    Thread 1: starting
    Thread 2: starting
    Thread 1: finishing
    Thread 0: finishing
    Thread 2: finishing

Again, notice how Thread 1 finished before Thread 0. The scheduling of threads is done by the operating system and does not follow a plan that's easy to figure out.
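The executor example above ignores return values because thread_function() returns nothing. As a hedged aside, .map() also hands back an iterator of results, ordered by input rather than by completion. The square() function below is a made-up stand-in that deliberately makes later inputs finish sooner.

```python
import concurrent.futures
import time


def square(number):
    """Hypothetical task: larger inputs sleep less, so they finish first."""
    time.sleep(0.1 * (3 - number))
    return number * number


with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    # map() yields results in the order of the inputs, not of completion.
    results = list(executor.map(square, range(3)))

print(results)  # [0, 1, 4]
```

So even though the threads finish in an unpredictable order, collecting results through .map() keeps them lined up with the inputs.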

Race Conditions

Before you move on to some of the other features tucked away in Python threading, let's talk a bit about one of the more difficult issues you'll run into when writing threaded programs: race conditions.

Once you've seen what a race condition is and looked at one happening, you'll move on to some of the primitives provided by the standard library to prevent race conditions from happening.

Race conditions can occur when two or more threads access a shared piece of data or resource. In this example, you're going to create a large race condition that happens every time, but be aware that most race conditions are not this obvious. Frequently, they only occur rarely, and they can produce confusing results. As you can imagine, this makes them quite difficult to debug.

Fortunately, this race condition will happen every time, and you'll walk through it in detail to explain what is happening.

For this example, you're going to write a class that updates a database. Okay, you're not really going to have a database: you're just going to fake it, because that's not the point of this article.

Your FakeDatabase will have .__init__() and .update() methods:

    class FakeDatabase:
        def __init__(self):
            self.value = 0

        def update(self, name):
            logging.info("Thread %s: starting update", name)
            local_copy = self.value
            local_copy += 1
            time.sleep(0.1)
            self.value = local_copy
            logging.info("Thread %s: finishing update", name)

FakeDatabase is keeping track of a single number: .value. This is going to be the shared data on which you'll see the race condition.

.__init__() simply initializes .value to zero. So far, so good.

.update() looks a little strange. It's simulating reading a value from a database, doing some computation on it, and then writing a new value back to the database.

In this case, reading from the database just means copying .value to a local variable. The computation is just to add one to the value and then .sleep() for a little bit. Finally, it writes the value back by copying the local value back to .value.

Here's how you'll use this FakeDatabase:

    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO,
                            datefmt="%H:%M:%S")

        database = FakeDatabase()
        logging.info("Testing update. Starting value is %d.", database.value)
        with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
            for index in range(2):
                executor.submit(database.update, index)
        logging.info("Testing update. Ending value is %d.", database.value)

The program creates a ThreadPoolExecutor with two threads and then calls .submit() on the executor twice, once per task, telling the pool to run database.update().

.submit() has a signature that allows both positional and named arguments to be passed to the function running in the thread:

    .submit(function, *args, **kwargs)

In the usage above, index is passed as the first and only positional argument to database.update(). You'll see later in this article where you can pass multiple arguments in a similar manner.
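Here is a minimal sketch of that signature in use, passing one positional and one keyword argument. The describe() function and its punctuation parameter are invented for the illustration. .submit() returns a Future, and calling .result() on it waits for the return value.

```python
import concurrent.futures


def describe(name, punctuation="."):
    """Hypothetical task that builds a short status string."""
    return f"Thread {name} reporting{punctuation}"


with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
    # Positional argument only.
    plain = executor.submit(describe, 0)
    # Positional plus keyword argument, forwarded unchanged to describe().
    loud = executor.submit(describe, 1, punctuation="!")

print(plain.result())  # Thread 0 reporting.
print(loud.result())   # Thread 1 reporting!
```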

Since each thread runs .update(), and .update() adds one to .value, you might expect database.value to be 2 when it's printed out at the end. But you wouldn't be looking at this example if that was the case. If you run the above code, the output looks like this:

    $ ./racecond.py
    Testing unlocked update. Starting value is 0.
    Thread 0: starting update
    Thread 1: starting update
    Thread 0: finishing update
    Thread 1: finishing update
    Testing unlocked update. Ending value is 1.

You might have expected that to happen, but let's look at the details of what's really going on here, as that will make the solution to this problem easier to understand.

1 Thread

Before you dive into this issue with two threads, let's step back and talk a bit about some details of how threads work.

You won't be diving into all of the details here, as that's not important at this level. We'll also be simplifying a few things in a way that won't be technically accurate but will give you the right idea of what is happening.

When you tell your ThreadPoolExecutor to run each thread, you tell it which function to run and what parameters to pass to it: executor.submit(database.update, index).

The result of this is that each of the threads in the pool will call database.update(index). Note that database is a reference to the one FakeDatabase object created in __main__. Calling .update() on that object calls an instance method on that object.

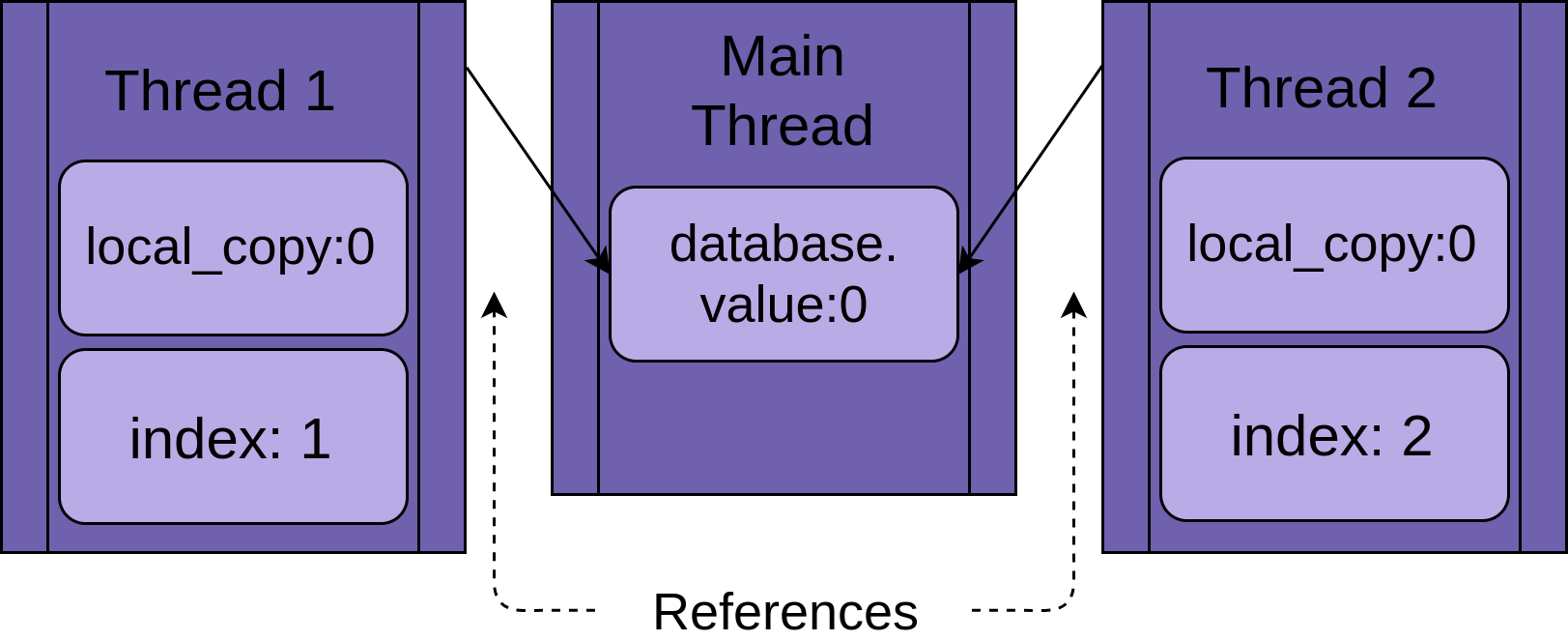

Each thread is going to have a reference to the same FakeDatabase object, database. Each thread will also have a unique value, index, to make the logging statements a bit easier to read:

When the thread starts running .update(), it has its own version of all of the data local to the function. In the case of .update(), this is local_copy. This is definitely a good thing. Otherwise, two threads running the same function would always confuse each other. It means that all variables that are scoped (or local) to a function are thread-safe.

Now you can start walking through what happens if you run the program above with a single thread and a single call to .update().

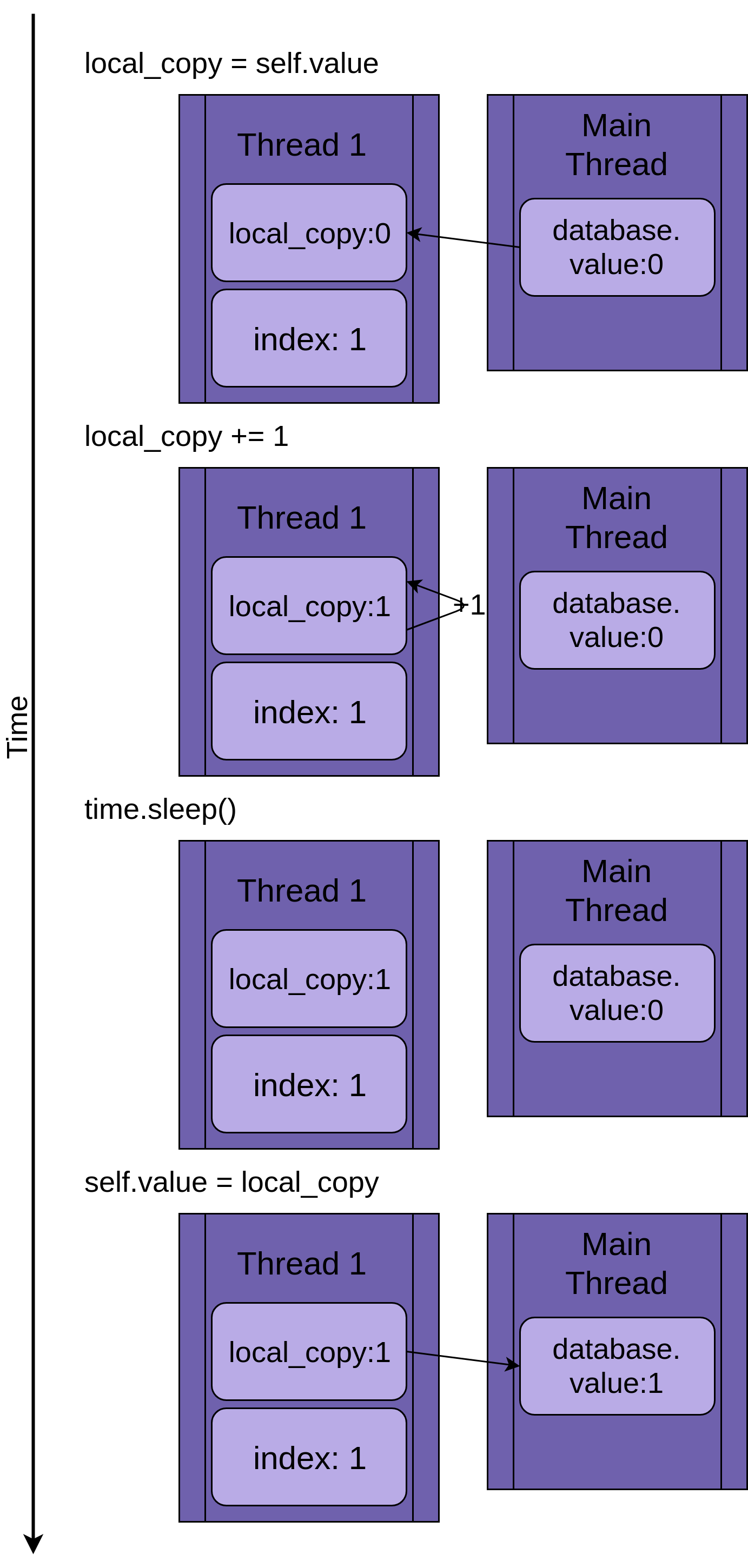

The accompanying image steps through the execution of .update() if only a single thread is run. The statement is shown on the left followed by a diagram showing the values in the thread's local_copy and the shared database.value:

The diagram is laid out so that time increases as you move from top to bottom. It begins when Thread 1 is created and ends when it is terminated.

When Thread 1 starts, FakeDatabase.value is zero. The first line of code in the method, local_copy = self.value, copies the value zero to the local variable. Next it increments the value of local_copy with the local_copy += 1 statement. You can see local_copy in Thread 1 getting set to one.

Next time.sleep() is called, which makes the current thread pause and allows other threads to run. Since there is only one thread in this example, this has no effect.

When Thread 1 wakes up and continues, it copies the new value from local_copy to FakeDatabase.value, and then the thread is complete. You can see that database.value is set to one.

So far, so good. You ran .update() once and FakeDatabase.value was incremented to one.

2 Threads

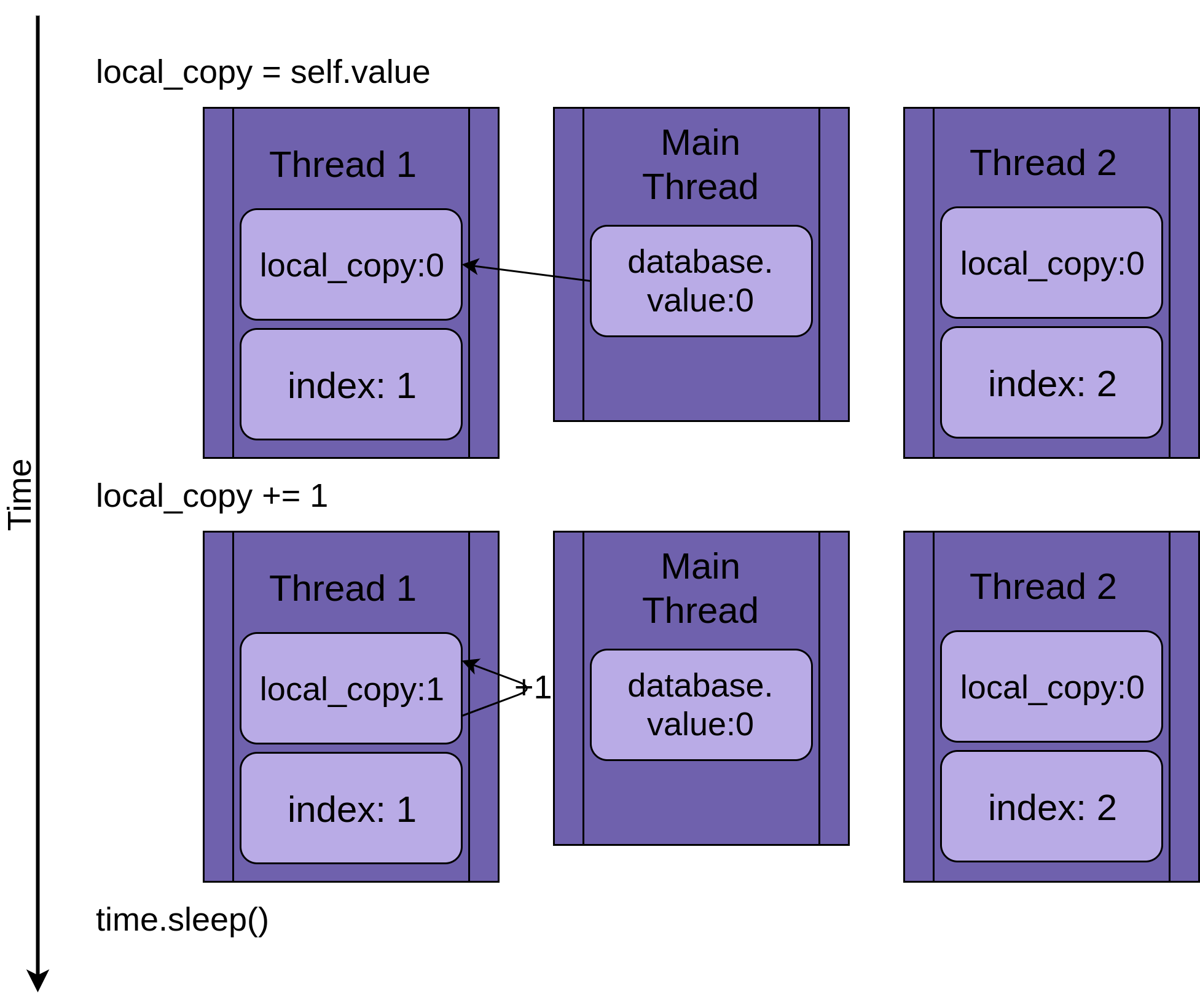

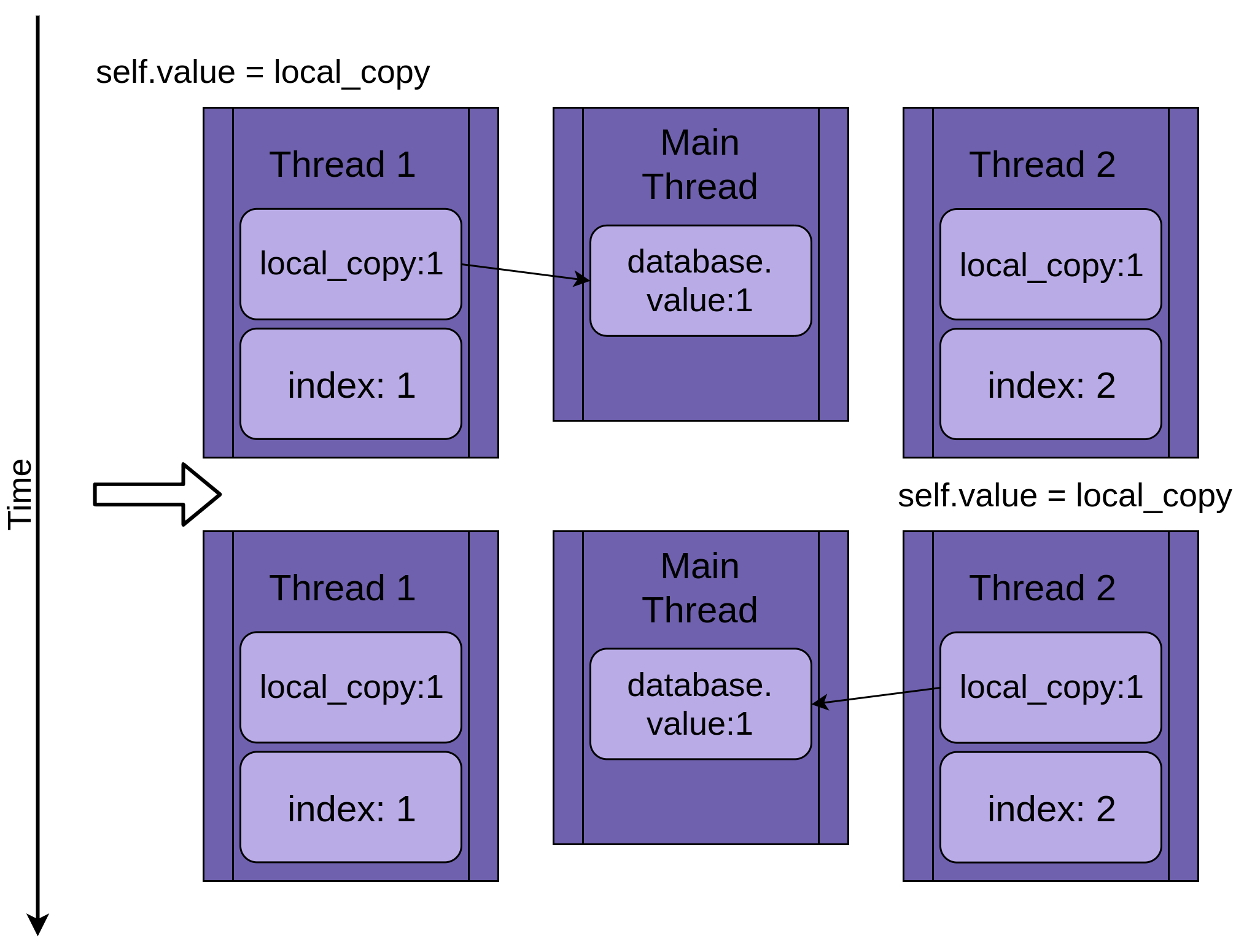

Getting back to the race condition, the two threads will be running concurrently but not at the same time. They will each have their own version of local_copy and will each point to the same database. It is this shared database object that is going to cause the problems.

The program starts with Thread 1 running .update():

When Thread 1 calls time.sleep(), it allows the other thread to start running. This is where things get interesting.

Thread 2 starts up and does the same operations. It's also copying database.value into its private local_copy, and this shared database.value has not yet been updated:

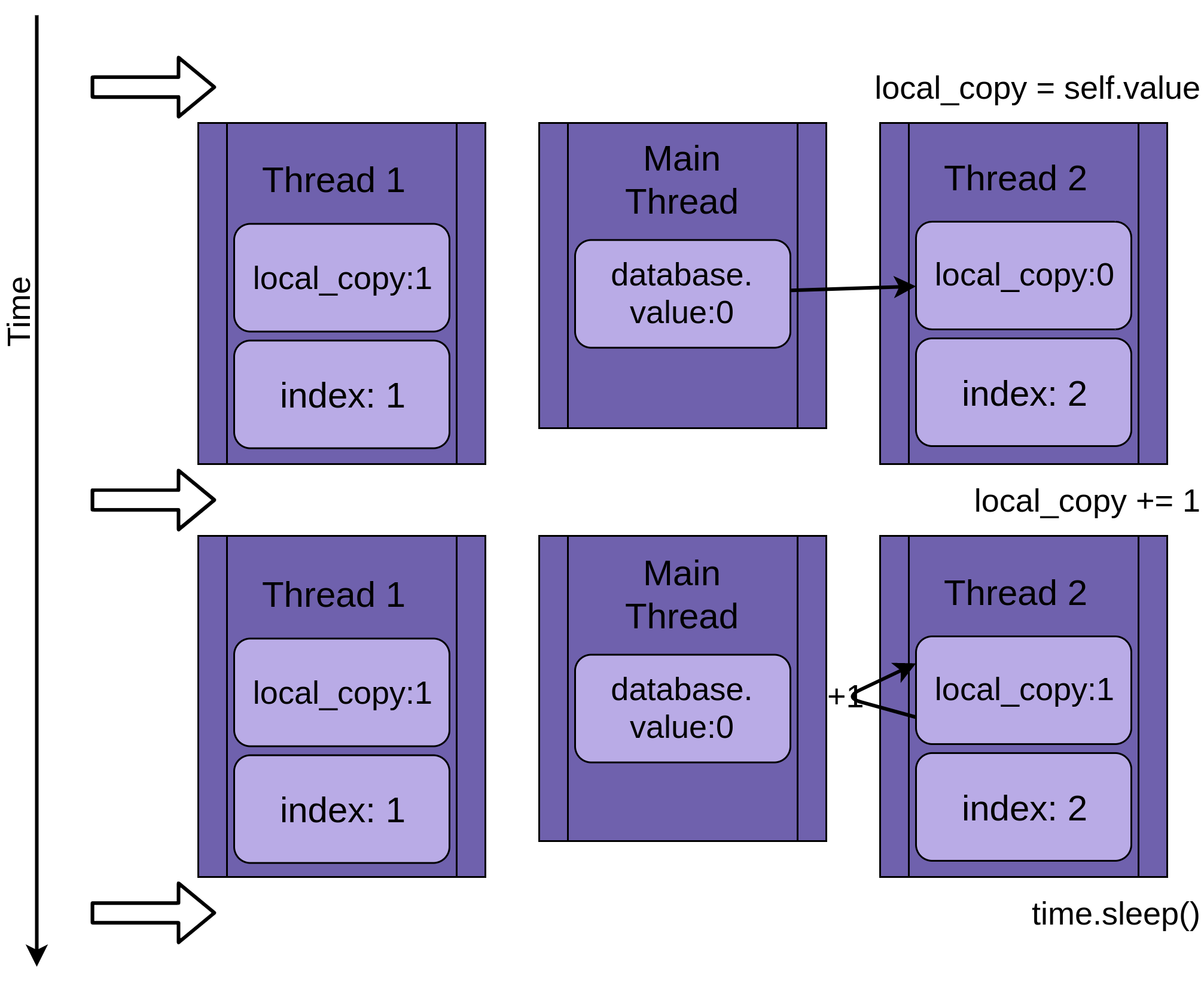

When Thread 2 finally goes to sleep, the shared database.value is still unmodified at zero, and both private versions of local_copy have the value one.

Thread 1 now wakes up and saves its version of local_copy and then terminates, giving Thread 2 a final chance to run. Thread 2 has no idea that Thread 1 ran and updated database.value while it was sleeping. It stores its version of local_copy into database.value, also setting it to one:

The two threads have interleaving access to a single shared object, overwriting each other's results. Similar race conditions can arise when one thread frees memory or closes a file handle before the other thread is finished accessing it.

Why This Isn't a Silly Example

The example above is contrived to make sure that the race condition happens every time you run your program. Because the operating system can swap out a thread at any time, it is possible to interrupt a statement like x = x + 1 after it has read the value of x but before it has written back the incremented value.

The details of how this happens are quite interesting, but not needed for the rest of this article, so feel free to skip over this aside.

The code above isn't quite as out there as you might originally have thought. It was designed to force a race condition every time you run it, but that makes it much easier to solve than most race conditions.

There are two things to keep in mind when thinking about race conditions:

- Even an operation like x += 1 takes the processor many steps. Each of these steps is a separate instruction to the processor.

- The operating system can swap which thread is running at any time. A thread can be swapped out after any of these small instructions. This means that a thread can be put to sleep to let another thread run in the middle of a Python statement.

Let's look at this in detail. The REPL below shows a function that takes a parameter and increments it:

    >>> def inc(x):
    ...     x += 1
    ...
    >>> import dis
    >>> dis.dis(inc)
      2           0 LOAD_FAST                0 (x)
                  2 LOAD_CONST               1 (1)
                  4 INPLACE_ADD
                  6 STORE_FAST               0 (x)
                  8 LOAD_CONST               0 (None)
                 10 RETURN_VALUE

The REPL example uses dis from the Python standard library to show the smaller steps that the processor does to implement your function. It does a LOAD_FAST of the data value x, it does a LOAD_CONST 1, and then it uses the INPLACE_ADD to add those values together. The exact instruction names vary between CPython versions.

We're stopping here for a specific reason. This is the point in .update() above where time.sleep() forced the threads to switch. It is entirely possible that, every once in a while, the operating system would switch threads at that exact point even without sleep(), but the call to sleep() makes it happen every time.

As you learned above, the operating system can swap threads at any time. You've walked down this listing to the statement marked 4. If the operating system swaps out this thread and runs a different thread that also modifies x, then when this thread resumes, it will overwrite x with an incorrect value.

Technically, this example won't have a race condition because x is local to inc(). It does illustrate how a thread can be interrupted during a single Python operation, however. The same LOAD, MODIFY, STORE set of operations also happens on global and shared values. You can explore with the dis module and prove that yourself.
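One way to carry out that exploration is sketched below, using a made-up module-level counter and an increment_global() function. It lists the instruction names rather than the full disassembly, because the name of the add instruction differs between CPython versions.

```python
import dis

counter = 0  # shared, module-level value (hypothetical example)


def increment_global():
    global counter
    counter += 1


# Collect just the instruction names for the function's bytecode.
opnames = [instruction.opname for instruction in dis.get_instructions(increment_global)]
print(opnames)

# The load and the store of the global are separate instructions, so a
# thread switch can happen between them.
load_position = opnames.index("LOAD_GLOBAL")
store_position = opnames.index("STORE_GLOBAL")
print(load_position < store_position)
```

The load and the store show up as two distinct steps with the add in between, which is the same read-modify-write shape as .update().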

It's rare to get a race condition like this to occur, but remember that an infrequent event taken over millions of iterations becomes likely to happen. The rarity of these race conditions makes them much, much harder to debug than regular bugs.

Now back to your regularly scheduled tutorial!

Now that you've seen a race condition in action, let's find out how to solve them!

Basic Synchronization Using Lock

There are a number of ways to avoid or solve race conditions. You won't look at all of them here, but there are a couple that are used frequently. Let's start with Lock.

To solve your race condition above, you need to find a way to allow only one thread at a time into the read-modify-write section of your code. The most common way to do this is called Lock in Python. In some other languages this same idea is called a mutex. Mutex comes from MUTual EXclusion, which is exactly what a Lock does.

A Lock is an object that acts like a hall pass. Only one thread at a time can have the Lock. Any other thread that wants the Lock must wait until the owner of the Lock gives it up.

The basic functions to do this are .acquire() and .release(). A thread will call my_lock.acquire() to get the lock. If the lock is already held, the calling thread will wait until it is released. There's an important point here. If one thread gets the lock but never gives it back, your program will be stuck. You'll read more about this later.

Fortunately, Python's Lock will also operate as a context manager, so you can use it in a with statement, and it gets released automatically when the with block exits for any reason.
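The two styles can be compared side by side in a small sketch. The variable names are illustrative only, and the non-blocking acquire(blocking=False) call is used here just to show, without hanging, that a held Lock cannot be taken a second time.

```python
import threading

my_lock = threading.Lock()

# Explicit form: pair acquire() with release() in try/finally so the
# lock is given back even if the protected code raises.
my_lock.acquire()
try:
    held_explicitly = my_lock.locked()
finally:
    my_lock.release()

# Context-manager form: the with statement acquires and releases for you.
with my_lock:
    held_in_with = my_lock.locked()
    # A non-blocking attempt returns False because the lock is already held.
    second_attempt = my_lock.acquire(blocking=False)

released_after_with = not my_lock.locked()

print(held_explicitly, held_in_with, second_attempt, released_after_with)
# True True False True
```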

Let's look at the FakeDatabase with a Lock added to it. The calling function stays the same:

    class FakeDatabase:
        def __init__(self):
            self.value = 0
            self._lock = threading.Lock()

        def locked_update(self, name):
            logging.info("Thread %s: starting update", name)
            logging.debug("Thread %s about to lock", name)
            with self._lock:
                logging.debug("Thread %s has lock", name)
                local_copy = self.value
                local_copy += 1
                time.sleep(0.1)
                self.value = local_copy
                logging.debug("Thread %s about to release lock", name)
            logging.debug("Thread %s after release", name)
            logging.info("Thread %s: finishing update", name)

Other than adding a bunch of debug logging so you can see the locking more clearly, the big change here is to add a member called ._lock, which is a threading.Lock() object. This ._lock is initialized in the unlocked state and locked and released by the with statement.

It's worth noting here that the thread running this function will hold on to that Lock until it is completely finished updating the database. In this case, that means it will hold the Lock while it copies, updates, sleeps, and then writes the value back to the database.

If you run this version with logging set to INFO level, you'll see this:

    $ ./fixrace.py
    Testing locked update. Starting value is 0.
    Thread 0: starting update
    Thread 1: starting update
    Thread 0: finishing update
    Thread 1: finishing update
    Testing locked update. Ending value is 2.

Look at that. Your program finally works!

You can turn on full logging by setting the level to DEBUG by adding this statement after you configure the logging output in __main__:

    logging.getLogger().setLevel(logging.DEBUG)

Running this program with DEBUG logging turned on looks like this:

    $ ./fixrace.py
    Testing locked update. Starting value is 0.
    Thread 0: starting update
    Thread 0 about to lock
    Thread 0 has lock
    Thread 1: starting update
    Thread 1 about to lock
    Thread 0 about to release lock
    Thread 0 after release
    Thread 0: finishing update
    Thread 1 has lock
    Thread 1 about to release lock
    Thread 1 after release
    Thread 1: finishing update
    Testing locked update. Ending value is 2.

In this output you can see Thread 0 acquires the lock and is still holding it when it goes to sleep. Thread 1 then starts and attempts to acquire the same lock. Because Thread 0 is still holding it, Thread 1 has to wait. This is the mutual exclusion that a Lock provides.

Many of the examples in the rest of this article will have INFO and DEBUG level logging. We'll generally only show the INFO level output, as the DEBUG logs can be quite lengthy. Try out the programs with the logging turned up and see what they do.

Deadlock

Before you move on, you should look at a common problem when using Locks. As you saw, if the Lock has already been acquired, a second call to .acquire() will wait until the thread that is holding the Lock calls .release(). What do you think happens when you run this code:

    import threading

    l = threading.Lock()
    print("before first acquire")
    l.acquire()
    print("before second acquire")
    l.acquire()
    print("acquired lock twice")

When the program calls l.acquire() the second time, it hangs waiting for the Lock to be released. In this example, you can fix the deadlock by removing the second call, but deadlocks usually happen from one of two subtle things:

- An implementation bug where a Lock is not released properly

- A design issue where a utility function needs to be called by functions that might or might not already have the Lock

The first situation happens sometimes, but using a Lock as a context manager greatly reduces how often. It is recommended to write code whenever possible to make use of context managers, as they help to avoid situations where an exception skips you over the .release() call.
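A short sketch of that first situation: the hypothetical risky_update() function below raises an exception while holding the lock, and the with statement still releases it on the way out.

```python
import threading

lock = threading.Lock()


def risky_update():
    """Hypothetical update that fails while the lock is held."""
    with lock:
        raise ValueError("something went wrong mid-update")


try:
    risky_update()
except ValueError:
    pass  # the error is expected in this demonstration

# The with block released the lock even though an exception was raised.
lock_was_released = not lock.locked()
print(lock_was_released)  # True
```

Had risky_update() called lock.acquire() and lock.release() directly without try/finally, the exception would have skipped the release and the next caller would have waited forever.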

The design issue can be a bit trickier in some languages. Thankfully, Python threading has a second object, called RLock, that is designed for just this situation. It allows a thread to .acquire() an RLock multiple times before it calls .release(). That thread is still required to call .release() the same number of times it called .acquire(), but it should be doing that anyway.
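The following is a minimal sketch of that design issue, using an invented Counter class. Its increment() method is the utility function: it may be called directly, or from increment_twice(), which already holds the lock.

```python
import threading


class Counter:
    """Hypothetical shared counter protected by a re-entrant lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.RLock()

    def increment(self):
        # Utility method: callers may or may not already hold the lock.
        with self._lock:
            self.value += 1

    def increment_twice(self):
        with self._lock:
            # Each call re-acquires the lock this thread already holds.
            self.increment()
            self.increment()


counter = Counter()
counter.increment()
counter.increment_twice()
print(counter.value)  # 3
```

With a plain Lock in place of the RLock, the call to increment_twice() would hang on the nested acquire, just like the two-acquire example above.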

Lock and RLock are two of the basic tools used in threaded programming to prevent race conditions. There are a few others that work in different ways. Before you look at them, let's shift to a slightly different problem domain.

Producer-Consumer Threading

The Producer-Consumer Problem is a standard computer science problem used to look at threading or process synchronization issues. You're going to look at a variant of it to get some ideas of what primitives the Python threading module provides.

For this example, you're going to imagine a program that needs to read messages from a network and write them to disk. The program does not request a message when it wants. It must be listening and accept messages as they come in. The messages will not come in at a regular pace, but will be coming in bursts. This part of the program is called the producer.

On the other side, once you have a message, you need to write it to a database. The database access is slow, but fast enough to keep up to the average pace of messages. It is not fast enough to keep up when a burst of messages comes in. This part is the consumer.

In between the producer and the consumer, you will create a Pipeline that will be the part that changes as you learn about different synchronization objects.

That's the basic layout. Let's look at a solution using Lock. It doesn't work perfectly, but it uses tools you already know, so it's a good place to start.

Producer-Consumer Using Lock

Since this is an article about Python threading, and since you just read about the Lock primitive, let's try to solve this problem with two threads using a Lock or two.

The general design is that there is a producer thread that reads from the fake network and puts the message into a Pipeline:

    import logging
    import random

    SENTINEL = object()

    def producer(pipeline):
        """Pretend we're getting a message from the network."""
        for index in range(10):
            message = random.randint(1, 101)
            logging.info("Producer got message: %s", message)
            pipeline.set_message(message, "Producer")

        # Send a sentinel message to tell consumer we're done
        pipeline.set_message(SENTINEL, "Producer")

To generate a fake message, the producer gets a random number between 1 and 101 (random.randint() includes both endpoints). It calls .set_message() on the pipeline to send it to the consumer.

The producer also uses a SENTINEL value to signal the consumer to stop after it has sent ten values. This is a little awkward, but don't worry, you'll see ways to get rid of this SENTINEL value after you work through this example.

On the other side of the pipeline is the consumer:

    def consumer(pipeline):
        """Pretend we're saving a number in the database."""
        message = 0
        while message is not SENTINEL:
            message = pipeline.get_message("Consumer")
            if message is not SENTINEL:
                logging.info("Consumer storing message: %s", message)

The consumer reads a message from the pipeline and writes it to a fake database, which in this case is just printing it to the display. If it gets the SENTINEL value, it returns from the function, which will terminate the thread.

Before you look at the really interesting part, the Pipeline, here's the __main__ section, which spawns these threads:

    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO,
                            datefmt="%H:%M:%S")
        # logging.getLogger().setLevel(logging.DEBUG)

        pipeline = Pipeline()
        with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
            executor.submit(producer, pipeline)
            executor.submit(consumer, pipeline)

This should look fairly familiar as it's close to the __main__ code in the previous examples.

Remember that you can turn on DEBUG logging to see all of the logging messages by uncommenting this line:

    # logging.getLogger().setLevel(logging.DEBUG)

It can be worthwhile to walk through the DEBUG logging messages to see exactly where each thread acquires and releases the locks.

Now let's take a look at the Pipeline that passes messages from the producer to the consumer:

    class Pipeline:
        """
        Class to allow a single element pipeline between producer and consumer.
        """
        def __init__(self):
            self.message = 0
            self.producer_lock = threading.Lock()
            self.consumer_lock = threading.Lock()
            self.consumer_lock.acquire()

        def get_message(self, name):
            logging.debug("%s:about to acquire getlock", name)
            self.consumer_lock.acquire()
            logging.debug("%s:have getlock", name)
            message = self.message
            logging.debug("%s:about to release setlock", name)
            self.producer_lock.release()
            logging.debug("%s:setlock released", name)
            return message

        def set_message(self, message, name):
            logging.debug("%s:about to acquire setlock", name)
            self.producer_lock.acquire()
            logging.debug("%s:have setlock", name)
            self.message = message
            logging.debug("%s:about to release getlock", name)
            self.consumer_lock.release()
            logging.debug("%s:getlock released", name)

Woah! That's a lot of code. A pretty high percentage of that is just logging statements to make it easier to see what's happening when you run it. Here's the same code with all of the logging statements removed:

    class Pipeline:
        """
        Class to allow a single element pipeline between producer and consumer.
        """
        def __init__(self):
            self.message = 0
            self.producer_lock = threading.Lock()
            self.consumer_lock = threading.Lock()
            self.consumer_lock.acquire()

        def get_message(self, name):
            self.consumer_lock.acquire()
            message = self.message
            self.producer_lock.release()
            return message

        def set_message(self, message, name):
            self.producer_lock.acquire()
            self.message = message
            self.consumer_lock.release()

That seems a bit more manageable. The Pipeline in this version of your code has three members:

- .message stores the message to pass.

- .producer_lock is a threading.Lock object that restricts access to the message by the producer thread.

- .consumer_lock is also a threading.Lock that restricts access to the message by the consumer thread.

__init__() initializes these three members and then calls .acquire() on the .consumer_lock. This is the state you want to start in. The producer is allowed to add a new message, but the consumer needs to wait until a message is present.

.get_message() and .set_message() are nearly opposites. .get_message() calls .acquire() on the .consumer_lock. This is the call that will make the consumer wait until a message is ready.

Once the consumer has acquired the .consumer_lock, it copies out the value in .message then calls .release() on the .producer_lock. Releasing this lock is what allows the producer to insert the next message into the pipeline.

Before you go on to .set_message(), there's something subtle going on in .get_message() that's pretty easy to miss. It might seem tempting to get rid of message and just have the function end with return self.message. See if you can figure out why you don't want to do that before moving on.

Here's the answer. As soon as the consumer calls .producer_lock.release(), it can be swapped out, and the producer can start running. That could happen before .release() returns! This means that there is a slight possibility that when the function returns self.message, that could actually be the next message generated, so you would lose the first message. This is another example of a race condition.

Moving on to .set_message(), you can see the opposite side of the transaction. The producer will call this with a message. It will acquire the .producer_lock, set the .message, and then call .release() on the .consumer_lock, which will allow the consumer to read that value.

Let's run the code that has logging set to INFO and see what it looks like:

    $ ./prodcom_lock.py
    Producer got data 43
    Producer got data 45
    Consumer storing data: 43
    Producer got data 86
    Consumer storing data: 45
    Producer got data 40
    Consumer storing data: 86
    Producer got data 62
    Consumer storing data: 40
    Producer got data 15
    Consumer storing data: 62
    Producer got data 16
    Consumer storing data: 15
    Producer got data 61
    Consumer storing data: 16
    Producer got data 73
    Consumer storing data: 61
    Producer got data 22
    Consumer storing data: 73
    Consumer storing data: 22

At first, you might find it odd that the producer gets two messages before the consumer even runs. If you look back at the producer and .set_message(), you will notice that the only place it will wait for a Lock is when it attempts to put the message into the pipeline. This is done after the producer gets the message and logs that it has it.

When the producer attempts to send this second message, it will call .set_message() the second time and it will block.

The operating system can swap threads at any time, but it generally lets each thread have a reasonable amount of time to run before swapping it out. That's why the producer usually runs until it blocks in the second call to .set_message().

Once a thread is blocked, however, the operating system will always swap it out and find a different thread to run. In this case, the only other thread with anything to do is the consumer.

The consumer calls .get_message(), which reads the message and calls .release() on the .producer_lock, thus allowing the producer to run again the next time threads are swapped.

Notice that the first message was 43, and that is exactly what the consumer read, even though the producer had already generated the 45 message.

While it works for this limited test, it is not a great solution to the producer-consumer problem in general because it only allows a single value in the pipeline at a time. When the producer gets a burst of messages, it will have nowhere to put them.

Let's move on to a better way to solve this problem, using a Queue.

Producer-Consumer Using Queue

If you want to be able to handle more than one value in the pipeline at a time, you'll need a data structure for the pipeline that allows the number to grow and shrink as data backs up from the producer.

Python's standard library has a queue module which, in turn, has a Queue class. Let's change the Pipeline to use a Queue instead of just a variable protected by a Lock. You'll also use a different way to stop the worker threads by using a different primitive from Python threading, an Event.

Let's start with the Event. The threading.Event object allows one thread to signal an event while many other threads can be waiting for that event to happen. The key usage in this code is that the threads that are waiting for the event do not necessarily need to stop what they are doing, they can just check the status of the Event every once in a while.
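To see the polling style in isolation, here is a minimal sketch that is separate from the producer-consumer code. The names stop_event, ticks, and worker are invented for this illustration. The worker keeps doing small units of work and only checks the Event between them.

```python
import threading
import time

stop_event = threading.Event()
ticks = []


def worker():
    """Keep working until another thread sets the event."""
    while not stop_event.is_set():
        ticks.append(time.monotonic())  # stand-in for a unit of real work
        # .wait() doubles as a sleep that ends early once the event is set.
        stop_event.wait(timeout=0.01)


thread = threading.Thread(target=worker)
thread.start()

time.sleep(0.05)   # let the worker run for a moment
stop_event.set()   # signal it to stop
thread.join(timeout=1.0)
worker_stopped = not thread.is_alive()
```

The worker never blocks indefinitely on the Event, so it notices the signal within roughly one timeout period.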

The triggering of the event can be many things. In this example, the main thread will simply sleep for a while and then .set() it:

     1 if __name__ == "__main__":
     2     format = "%(asctime)s: %(message)s"
     3     logging.basicConfig(format=format, level=logging.INFO,
     4                         datefmt="%H:%M:%S")
     5     # logging.getLogger().setLevel(logging.DEBUG)
     6
     7     pipeline = Pipeline()
     8     event = threading.Event()
     9     with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
    10         executor.submit(producer, pipeline, event)
    11         executor.submit(consumer, pipeline, event)
    12
    13         time.sleep(0.1)
    14         logging.info("Main: about to set event")
    15         event.set()

The only changes here are the creation of the event object on line 8, passing the event as a parameter on lines 10 and 11, and the final section on lines 13 to 15, which sleep for a tenth of a second, log a message, and then call .set() on the event.

The producer also did non have to change too much:

    1 def producer(pipeline, event):
    2     """Pretend we're getting a number from the network."""
    3     while not event.is_set():
    4         message = random.randint(1, 101)
    5         logging.info("Producer got message: %s", message)
    6         pipeline.set_message(message, "Producer")
    7
    8     logging.info("Producer received EXIT event. Exiting")

It now will loop until it sees that the event was set on line 3. It also no longer puts the SENTINEL value into the pipeline.

consumer had to change a little more:

    def consumer(pipeline, event):
        """Pretend we're saving a number in the database."""
        while not event.is_set() or not pipeline.empty():
            message = pipeline.get_message("Consumer")
            logging.info(
                "Consumer storing message: %s (queue size=%s)",
                message,
                pipeline.qsize(),
            )

        logging.info("Consumer received EXIT event. Exiting")

While you got to take out the code related to the SENTINEL value, you did have to do a slightly more complicated while condition. Not only does it loop until the event is set, but it also needs to keep looping until the pipeline has been emptied.

Making sure the queue is empty before the consumer finishes prevents another fun issue. If the consumer does exit while the pipeline has messages in it, there are two bad things that can happen. The first is that you lose those final messages, but the more serious one is that the producer can get caught attempting to add a message to a full queue and never return.

This happens if the event gets triggered after the producer has checked the .is_set() condition but before it calls pipeline.set_message().

If that happens, it's possible for the consumer to wake up and exit with the queue still completely full. The producer will then call .set_message() which will wait until there is space on the queue for the new message. The consumer has already exited, so this will not happen and the producer will not exit.
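The tutorial's code avoids this hang by having the consumer drain the queue. As an aside, another way to guard the producer side, which is not what the tutorial's code does, is to call .put() with a timeout and re-check the event between attempts. The helper put_until_stopped below and its poll_seconds parameter are hypothetical names for this sketch.

```python
import queue
import threading


def put_until_stopped(q, item, stop_event, poll_seconds=0.01):
    """Try to enqueue item; give up once stop_event is set.

    Returns True if the item was enqueued, False otherwise.
    """
    while not stop_event.is_set():
        try:
            q.put(item, timeout=poll_seconds)
            return True
        except queue.Full:
            continue  # queue still full, so check the event again
    return False


# A full queue with nobody consuming from it.
full_queue = queue.Queue(maxsize=1)
full_queue.put("already here")

stop = threading.Event()
stopper = threading.Timer(0.05, stop.set)  # set the event shortly
stopper.start()

enqueued = put_until_stopped(full_queue, "late message", stop)
stopper.join()

# With room in the queue, the same helper succeeds immediately.
roomy_queue = queue.Queue(maxsize=1)
enqueued_with_room = put_until_stopped(roomy_queue, "hello", threading.Event())
```

The cost of this approach is that a message can be dropped when the event fires, so whether it fits depends on how much you care about those last messages.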

The rest of the consumer should look familiar.

The Pipeline has changed dramatically, however:

    class Pipeline(queue.Queue):
        def __init__(self):
            super().__init__(maxsize=10)

        def get_message(self, name):
            logging.debug("%s:about to get from queue", name)
            value = self.get()
            logging.debug("%s:got %d from queue", name, value)
            return value

        def set_message(self, value, name):
            logging.debug("%s:about to add %d to queue", name, value)
            self.put(value)
            logging.debug("%s:added %d to queue", name, value)

You can see that Pipeline is a subclass of queue.Queue. Queue has an optional parameter when initializing to specify a maximum size of the queue.

If you give a positive number for maxsize, it will limit the queue to that number of elements, causing .put() to block until there are fewer than maxsize elements. If you don't specify maxsize, then the queue will grow to the limits of your computer's memory.
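You can watch the maxsize limit at work in a single thread. This sketch uses a tiny queue and a short timeout so that the blocked .put() raises queue.Full instead of waiting forever. The variable names are only for illustration.

```python
import queue

small_queue = queue.Queue(maxsize=2)
small_queue.put("a")
small_queue.put("b")

# The queue is full, so this .put() blocks. The timeout turns the wait
# into a queue.Full exception after 0.05 seconds.
try:
    small_queue.put("c", timeout=0.05)
    third_put_succeeded = True
except queue.Full:
    third_put_succeeded = False

# Removing one element frees a slot, and the same .put() now succeeds.
first_item = small_queue.get()
small_queue.put("c", timeout=0.05)
final_size = small_queue.qsize()
```

In the threaded program there is no timeout, so the producer simply waits inside .put() until the consumer removes an element.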

.get_message() and .set_message() got much smaller. They basically wrap .get() and .put() on the Queue. You might be wondering where all of the locking code that prevents the threads from causing race conditions went.

The core devs who wrote the standard library knew that a Queue is frequently used in multi-threading environments and incorporated all of that locking code inside the Queue itself. Queue is thread-safe.

Running this program looks like the following:

    $ ./prodcom_queue.py
    Producer got message: 32
    Producer got message: 51
    Producer got message: 25
    Producer got message: 94
    Producer got message: 29
    Consumer storing message: 32 (queue size=3)
    Producer got message: 96
    Consumer storing message: 51 (queue size=3)
    Producer got message: 6
    Consumer storing message: 25 (queue size=3)
    Producer got message: 31

    [many lines deleted]

    Producer got message: 80
    Consumer storing message: 94 (queue size=6)
    Producer got message: 33
    Consumer storing message: 20 (queue size=6)
    Producer got message: 48
    Consumer storing message: 31 (queue size=6)
    Producer got message: 52
    Consumer storing message: 98 (queue size=6)
    Main: about to set event
    Producer got message: 13
    Consumer storing message: 59 (queue size=6)
    Producer received EXIT event. Exiting
    Consumer storing message: 75 (queue size=6)
    Consumer storing message: 97 (queue size=5)
    Consumer storing message: 80 (queue size=4)
    Consumer storing message: 33 (queue size=3)
    Consumer storing message: 48 (queue size=2)
    Consumer storing message: 52 (queue size=1)
    Consumer storing message: 13 (queue size=0)
    Consumer received EXIT event. Exiting

If you read through the output in my example, you can see some interesting things happening. Right at the top, you can see the producer got to create five messages and place four of them on the queue. It got swapped out by the operating system before it could place the fifth one.

The consumer then ran and pulled off the first message. It printed out that message as well as how deep the queue was at that point:

    Consumer storing message: 32 (queue size=3)

This is how you know that the fifth message hasn't made it into the pipeline yet. The queue is down to size three after a single message was removed. You also know that the queue can hold ten messages, so the producer thread didn't get blocked by the queue. It was swapped out by the OS.

As the program starts to wrap up, you can see the main thread generating the event which causes the producer to exit immediately. The consumer still has a bunch of work to do, so it keeps running until it has cleaned out the pipeline.

Try playing with different queue sizes and calls to time.sleep() in the producer or the consumer to simulate longer network or disk access times respectively. Even slight changes to these elements of the program will make big differences in your results.

This is a much better solution to the producer-consumer problem, but you can simplify it even more. The Pipeline really isn't needed for this problem. Once you take away the logging, it just becomes a queue.Queue.

Here's what the final code looks like using queue.Queue directly:

    import concurrent.futures
    import logging
    import queue
    import random
    import threading
    import time

    def producer(queue, event):
        """Pretend we're getting a number from the network."""
        while not event.is_set():
            message = random.randint(1, 101)
            logging.info("Producer got message: %s", message)
            queue.put(message)

        logging.info("Producer received event. Exiting")

    def consumer(queue, event):
        """Pretend we're saving a number in the database."""
        while not event.is_set() or not queue.empty():
            message = queue.get()
            logging.info(
                "Consumer storing message: %s (size=%d)", message, queue.qsize()
            )

        logging.info("Consumer received event. Exiting")

    if __name__ == "__main__":
        format = "%(asctime)s: %(message)s"
        logging.basicConfig(format=format, level=logging.INFO,
                            datefmt="%H:%M:%S")

        pipeline = queue.Queue(maxsize=10)
        event = threading.Event()
        with concurrent.futures.ThreadPoolExecutor(max_workers=2) as executor:
            executor.submit(producer, pipeline, event)
            executor.submit(consumer, pipeline, event)

            time.sleep(0.1)
            logging.info("Main: about to set event")
            event.set()

That's easier to read and shows how using Python's built-in primitives can simplify a complex problem.

Lock and Queue are handy classes to solve concurrency issues, but there are others provided by the standard library. Before you wrap up this tutorial, let's do a quick survey of some of them.

Threading Objects

There are a few more primitives offered by the Python threading module. While you didn't need these for the examples above, they can come in handy in different use cases, so it's good to be familiar with them.

Semaphore

The first Python threading object to look at is threading.Semaphore. A Semaphore is a counter with a few special properties. The first one is that the counting is atomic. This means that there is a guarantee that the operating system will not swap out the thread in the middle of incrementing or decrementing the counter.

The internal counter is incremented when you call .release() and decremented when you call .acquire().

The next special property is that if a thread calls .acquire() when the counter is zero, that thread will block until a different thread calls .release() and increments the counter to one.

Semaphores are frequently used to protect a resource that has a limited capacity. An example would be if you have a pool of connections and want to limit the size of that pool to a specific number.
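Here is a rough sketch of the connection-pool idea. There is no real pool in it: MAX_CONNECTIONS, use_connection(), and the stats dictionary are assumptions made for the example, and time.sleep() stands in for work done on a connection. Six threads compete, but the Semaphore lets only two of them into the guarded section at once.

```python
import threading
import time

MAX_CONNECTIONS = 2  # hypothetical pool size
pool_slots = threading.Semaphore(MAX_CONNECTIONS)

stats = {"active": 0, "peak": 0}
stats_lock = threading.Lock()


def use_connection():
    # .acquire() on entry decrements the counter; .release() on exit
    # increments it again.
    with pool_slots:
        with stats_lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.02)  # pretend to use the connection
        with stats_lock:
            stats["active"] -= 1


threads = [threading.Thread(target=use_connection) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

After the threads finish, stats["peak"] never exceeds MAX_CONNECTIONS, which is the limit the Semaphore enforces.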

Timer

A threading.Timer is a way to schedule a function to be called after a certain amount of time has passed. You create a Timer by passing in a number of seconds to wait and a function to call:

    t = threading.Timer(30.0, my_function)

You start the Timer by calling .start(). The function will be called on a new thread at some point after the specified time, but be aware that there is no promise that it will be called exactly at the time you want.

If you want to stop a Timer that you've already started, you can cancel it by calling .cancel(). Calling .cancel() after the Timer has triggered does nothing and does not produce an exception.

A Timer can be used to prompt a user for action after a specific amount of time. If the user does the action before the Timer expires, .cancel() can be called.
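The following sketch shows both outcomes with a made-up remind() callback. The first Timer is cancelled before it expires, so the callback never runs. The second one is left alone and fires.

```python
import threading

fired = []


def remind():
    """Hypothetical callback standing in for a user prompt."""
    fired.append("reminder")


# Cancelled before the five seconds are up: remind() is never called.
cancelled_timer = threading.Timer(5.0, remind)
cancelled_timer.start()
cancelled_timer.cancel()
cancelled_timer.join(timeout=1.0)
fired_after_cancel = list(fired)

# Left to run: remind() is called on the Timer's own thread.
short_timer = threading.Timer(0.01, remind)
short_timer.start()
short_timer.join(timeout=1.0)

# Cancelling after the Timer has fired is harmless.
short_timer.cancel()
```

Because Timer is a subclass of Thread, you can .join() it to wait for the callback to finish.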

Barrier

A threading.Barrier can be used to keep a fixed number of threads in sync. When creating a Barrier, the caller must specify how many threads will be synchronizing on it. Each thread calls .wait() on the Barrier. They all will remain blocked until the specified number of threads are waiting, and then they are all released at the same time.

Remember that threads are scheduled by the operating system so, even though all of the threads are released simultaneously, they will be scheduled to run one at a time.

One use for a Barrier is to allow a pool of threads to initialize themselves. Having the threads wait on a Barrier after they are initialized will ensure that none of the threads start running before all of the threads are finished with their initialization.
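A small sketch of that initialization pattern might look like the following. NUM_WORKERS, worker(), and the events list are names chosen for the example. Each thread records an "init" step, waits on the Barrier, and only then records a "run" step.

```python
import threading

NUM_WORKERS = 3
barrier = threading.Barrier(NUM_WORKERS)

events = []
events_lock = threading.Lock()


def worker(worker_id):
    with events_lock:
        events.append(("init", worker_id))  # pretend initialization
    barrier.wait()  # block until all NUM_WORKERS threads get here
    with events_lock:
        events.append(("run", worker_id))   # real work starts after


threads = [
    threading.Thread(target=worker, args=(i,)) for i in range(NUM_WORKERS)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

kinds = [kind for kind, _ in events]
```

No thread can pass .wait() until every thread has logged its "init" entry, so all of the "init" entries appear in the list before any "run" entry, whatever order the threads are scheduled in.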

Conclusion: Threading in Python

You've now seen much of what Python threading has to offer and some examples of how to build threaded programs and the problems they solve. You've also seen a few instances of the problems that arise when writing and debugging threaded programs.

If you'd like to explore other options for concurrency in Python, check out Speed Up Your Python Program With Concurrency.

If you're interested in doing a deep dive on the asyncio module, go read Async IO in Python: A Complete Walkthrough.

Whatever you do, you now have the information and confidence you need to write programs using Python threading!

Special thanks to reader JL Diaz for helping to clean up the introduction.

Source: https://realpython.com/intro-to-python-threading/
